I've worked on various environments over the years, bare metals and cloud. I'm not a huge fan of counting data and numbers. In fact, the entire whitepaper (muh scientific) industry is a fraud.

Basically, any time some scammer comes along, like AWS, they tout you all the imaginary advantages of the cloud:

- Scalability

- Flexibility

- Cost efficiency

And it looks modern, shiny, so for most people logic automatically turns off. People start loving cloud and they start hating on premise hardware.

And any suggestion that running your own bare metals might actually be superior solution in all ways will inevitably meet resistance, like:

- Your experience is anecdotical

- Everyone's doing it (no one fired for buying IBM)

- There's no yet wide data available to suggest cloud is inferior

It is extra annoying when people try to hide behind science, like "this is not researched enough".

So, for some reason, to go into the cloud you can go based on feelings, emotions, illogical reasoning and you need to collect data points and perform science to then convince yourself to get out of the cloud?

I knew AWS is a fraud by the time I got my first ever bill and I compared it to the costs of the normal hosting. I didn't need whitepapers. Just for fun I made Eden Platform to work with AWS, but I'd never suggest to run that setup, because AWS will simply drain you on egress bandwidth costs, even if you run only few servers there for quorum. Because, even if all the heavy lifting of monitoring is performed in bare metals, we still want to have metrics of our piece of trash nodes which run on AWS so AWS will still need to send some data out because we monitor AWS nodes in Eden platform.

And I see slowly the fraud unfolding before everyone, and by the time historical fraud of biblical proportions is committed, and someone crunches the data (assuming it will not get censored away) and finally when whitepapers after decades will start saying the truth, that yes, indeed cloud is utterly cost ineffective and doesn't make any sense AWS will already have accumulated unbelievable profits and the next fad will start.

Imagine, if you see some old pedophile giving candies to children, and you can't do anything unless the pedophile already victimizes the child? This is cloud industry, which will kill so many startups with its costs alone and only years later the reality will become mainstream.

Let's discuss party lines of the cloud vendors and deconstruct them one by one:

Cloud is cost effective

Nothing could be further from the truth. In fact, if you're talking about AWS and you're talking about cost you're an idiot already. Basically, I computed the infrastructure cost that I have, of beefy bare metals, to which I pay 200 dollars for hosting and my local datacenter to which I pay 100 dollars for electricity.

I calculated the amount of cores and gigabytes of memory that I have versus that infrastructure that we had.

For CPU cores AWS was 56.17$ per core, and 13.95$ per gigabyte of memory per month. My infrastructure is 2$ per core and 0.27$ per gigabyte of memory per month.

So, the ratios between AWS cost are:

- 28x more expensive in AWS for cores

- 51x more expensive in AWS for memory

Now, the craziest part is... The AWS setup is cost optimized, it has sophisticated system to run mainly spot instances for most workloads, there was a bunch of other work to reduce costs as well performed, and with all this work the most optimistic ratio is 28x for cores?..

And some idiot managers in the meetings say "there's no economy of scale advantage yet to run our own hardware". That's what happens when you get clueless managers that never probably replaced hard disk in their lifetime dictating business decisions.

We can scale instantly with a cloud

Yes you can. Now, let's use some of our brains, look at this one "anecdotal" cost difference ratio of 28x and think about something.

If we just rented bare metal servers, how much more hardware we could get if cost ratio is 28x for the same price? 28x more cores. 51x more memory.

How about this scaling strategy: we just buy 10x more hardware than we need with bare metals, don't give a crap about scaling at all for a while, and we're roughly still 3x cheaper than AWS?

Or should we run some virtualized garbage, barely breathing services in tiny VMs and just keep using hardware at 28x cost after spending effort to optimize it to the best of our ability?

Automatic updates and security patches

Since I'm running NixOS I only do updates once couple years or so. Some people might say software is too old, I'm like, whatever. If some breach is serious, like openssl heartbleed then you'll hear about it. Most of what people consider "security" issues are imaginary and in their head.

Are your servers only accessible through SSH keys you own?

Are your private connections secured through Wireguard VPN?

Are your ZFS datasets encrypted and all secrets in memory, in case someone tries to boot server through installer USB stick or CD?

Is swap disabled on your servers?

I can answer yes to all of these, so, for me, patching just because there's a new version - I don't care. I'm building products most of the time these days and Eden platform lets me focus on that.

In fact, I think I can write other post, that the update procedure itself is a literal attack vector instead - everyone is being told update update update ASAP. I'll let everyone else update with fresh code, test it out for breaches in production, hear stories about how they were hacked and latest data breaches and then decide to update or not and to which version.

So, again, this is a non issue, and if you'll run your virtual machines in the cloud (you 99% will) you'll be responsible for updating the OS anyway.

Faster time to market

I don't understand this argument at all from cloud proponents. Right now, I already own a lot of hardware for me, my servers are almost idle. As long as I'm not doing some business which would require training/inferring AI models (that's just a modest share of 99% businesses out there that actually make money and not just blindly splash VC funds to the left and right) I can build hundreds of different website ideas on the same servers until I code website or service that is used by enough customers that I'd need to expand to more servers. Once I've built Eden platform I'm spending most of the time building new businesses. Sometimes I need to replace a disk, but my servers keep running because ZFS makes disk failure undetectable to applications.

Managed services

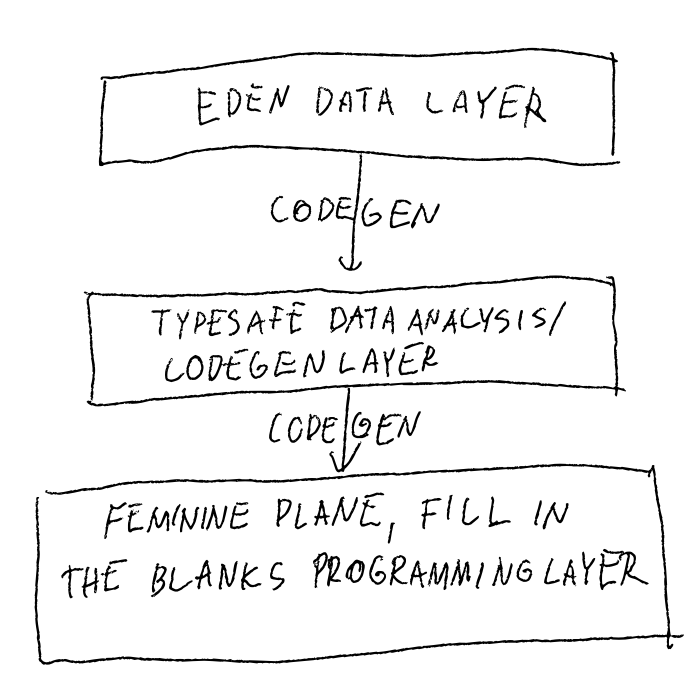

In Eden platform I have managed:

- HA postgres

- HA S3 (MinIO)

- HA queue system (NATS)

Everything is setup in a highly available manner and compile time error will tell me that, say, I only have 2 NATS instances if 3 are needed for Jetstream quorum.

If I don't like anything about the managed service, I own it and can change it, make more things configurable and so on. I could even fork the underlying components if I wanted. Good luck to do the same with cloud services.

Better customer experience

This point makes me really pissed. As having been on the side of both trenches I can confidently say that managing even thousands of bare metal services is simpler than dealing with cloud bullshit.

I only remember something magical few times, like new batch of servers shutting down within 24h, but we did Linux update and the issue was gone. Other than that, it is much more easy to debug once you actually own your own hardware. I know physical limits of my network ports and switches, just put monitoring on if they're reached, server/hard drive temperatures and if you're running NixOS nothing really unpredictable will happen. Once I worked out initial issues in my infrastructure, adjusting ventilation for good server temperature I forgot I have these servers. They're just running. Sometimes there's electricity loss but I have monitoring for that, I know when to reload in memory secrets and my systems are highly available - any DC or server can go down and my projects still work.

Now let me talk about some of the biggest pain points I've been dealing with, and the root cause is... the goddamn cloud

- Some component is alerting, running unreliably. Root cause? Its running on spot instance to "save costs" and it gets often shut down because AWS is taking our spot instance from us.

In my infrastructure this will never happen. My servers go down very rarely and jobs are rescheduled to run somewhere else. Even a shitty, unreliable software will run a lot better if it os not shutdown hundreds of times per year because we run on spot instances. And even spot instances don't deliver any real cost savings we could get from bare metals anyway, so what was the point of running it in the cloud?

- Magical connection disconnects for one service. Root cause? Some AWS bullshit limit was hit.

Of course, we don't own networking of AWS. We can't easily monitor it. Our network is doing who knows what. It amazes me that open source people would move heaven and earth not to run some proprietary software on their servers and proprietary software components are becoming extinct. But for some reason, it is okay to host in the cloud, where now you need to face myriad of magical networking problems because you don't have access to their network? How is this a thing? In hetzner your servers just use the VLAN and you configure it. That's it, as long as you're below the limits of the switch and the network card, which you can benchmark with iperf you'll be good. No magic bullshit because this AWS instances happen to have specific stuff about networking or whatever.

- Server becomes unresponsive. Root cause? AWS instance reached memory limits.

You know what happens on bare metals when this happens? Just process gets killed, I get an alert that process is killed. Most of the developers are aware of this issue. Their server doesn't become unresponsive.

- Docker container doesn't start. Root cause? It was built on a newer server with newer x86_64 instruction set and our AWS server was ancient.

I'll be fair, this could happen in old bare metals. But why I bring this point to attention is, Jeff Bezos knows that every server is valuable. They'll never decomission old severs as long as there's any life in them. Think about this, if you saw an ancient server from 15 years ago you'd say "I don't want it, its old". But if the same dusty ancient server ends up as one of your instances - it is still good for Bezos to give it to you as a VM! How in the world does that make sense?

- K8S Pod doesn't start on a node. Root cause? Not enough elastic network interfaces on a node

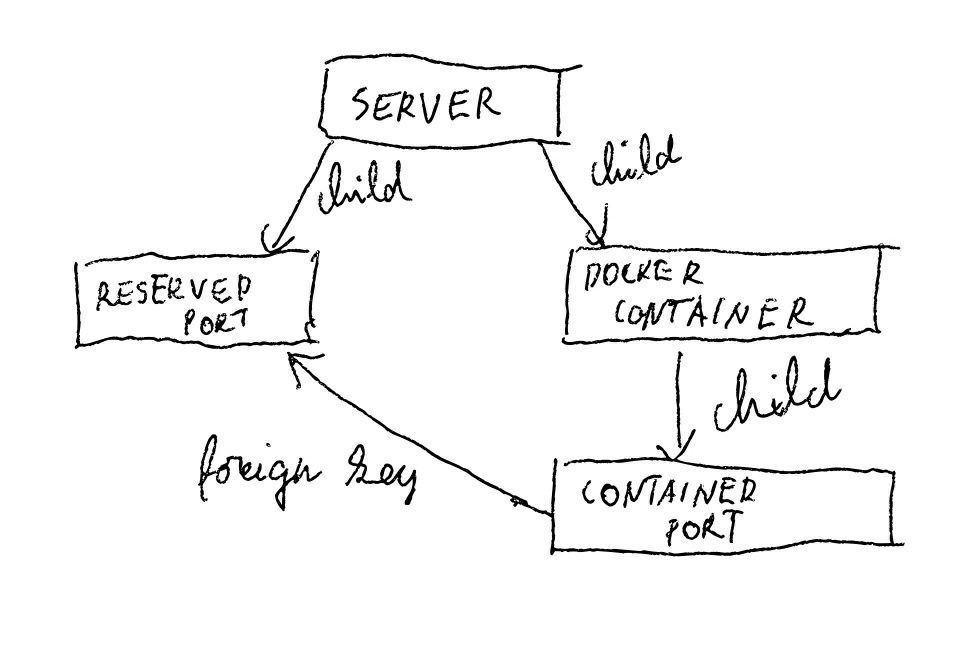

Again, will never happen in Eden platform. Our networking is extremely simple and doesn't need bandaid services running besides the ancient routing protocols running the entire internet, written by old graybeards that were designed well before even AWS was a thing. Every server in Eden platform has its own ip and containers use host networking for maximum efficiency. Caveat is, for every service you have to pick unique ports. What happens if I pick duplicate port? Its a compile time error in Eden platform. I can even code with OCaml to automatically pick free ports like DNS names. Much superior to having ENI exhaustion alerts and debugging issues in the middle of the night...

Basically, all the worst magical crazy bullshit issues that I've had - most of it was in the cloud environment. If I'm doing NixOS on bare metals I'm learning about Linux and it runs on most of the machines on the face of this earth. If I'm debugging AWS networking I'm just learning about meaningless AWS garbage that might just be a passing fad.

In conclusion

AWS is an amazing business model:

- You pay a shit ton more for their services

- You get to debug all the bullshit problems with their infrastructure and prevent them on your own

- You're the one blamed in case things stop working.

AWS is like a pedophile that always gets away easily by blaming its victims for everything and is motivated to perform more and more crime without anyone to stop it. Amazing customer experience!

So, I guess at this point, there's clearly not enough evidence to prove that cloud is an absolute trash and no whitepapers are written and this is a pseudoscience. But of course, at the end of the cloud era there will be people who knew which was better without whitepapers and there will be the blind followers who will take in all the damage until the mainstream viewpoints have shifted.

Good luck with your AWS account, whitepaper faggots!

And the rest of you have a good day

Basically, any time some scammer comes along, like AWS, they tout you all the imaginary advantages of the cloud:

- Scalability

- Flexibility

- Cost efficiency

And it looks modern, shiny, so for most people logic automatically turns off. People start loving cloud and they start hating on premise hardware.

And any suggestion that running your own bare metals might actually be superior solution in all ways will inevitably meet resistance, like:

- Your experience is anecdotical

- Everyone's doing it (no one fired for buying IBM)

- There's no yet wide data available to suggest cloud is inferior

It is extra annoying when people try to hide behind science, like "this is not researched enough".

So, for some reason, to go into the cloud you can go based on feelings, emotions, illogical reasoning and you need to collect data points and perform science to then convince yourself to get out of the cloud?

I knew AWS is a fraud by the time I got my first ever bill and I compared it to the costs of the normal hosting. I didn't need whitepapers. Just for fun I made Eden Platform to work with AWS, but I'd never suggest to run that setup, because AWS will simply drain you on egress bandwidth costs, even if you run only few servers there for quorum. Because, even if all the heavy lifting of monitoring is performed in bare metals, we still want to have metrics of our piece of trash nodes which run on AWS so AWS will still need to send some data out because we monitor AWS nodes in Eden platform.

And I see slowly the fraud unfolding before everyone, and by the time historical fraud of biblical proportions is committed, and someone crunches the data (assuming it will not get censored away) and finally when whitepapers after decades will start saying the truth, that yes, indeed cloud is utterly cost ineffective and doesn't make any sense AWS will already have accumulated unbelievable profits and the next fad will start.

Imagine, if you see some old pedophile giving candies to children, and you can't do anything unless the pedophile already victimizes the child? This is cloud industry, which will kill so many startups with its costs alone and only years later the reality will become mainstream.

Let's discuss party lines of the cloud vendors and deconstruct them one by one:

Cloud is cost effective

Nothing could be further from the truth. In fact, if you're talking about AWS and you're talking about cost you're an idiot already. Basically, I computed the infrastructure cost that I have, of beefy bare metals, to which I pay 200 dollars for hosting and my local datacenter to which I pay 100 dollars for electricity.

I calculated the amount of cores and gigabytes of memory that I have versus that infrastructure that we had.

For CPU cores AWS was 56.17$ per core, and 13.95$ per gigabyte of memory per month. My infrastructure is 2$ per core and 0.27$ per gigabyte of memory per month.

So, the ratios between AWS cost are:

- 28x more expensive in AWS for cores

- 51x more expensive in AWS for memory

Now, the craziest part is... The AWS setup is cost optimized, it has sophisticated system to run mainly spot instances for most workloads, there was a bunch of other work to reduce costs as well performed, and with all this work the most optimistic ratio is 28x for cores?..

And some idiot managers in the meetings say "there's no economy of scale advantage yet to run our own hardware". That's what happens when you get clueless managers that never probably replaced hard disk in their lifetime dictating business decisions.

We can scale instantly with a cloud

Yes you can. Now, let's use some of our brains, look at this one "anecdotal" cost difference ratio of 28x and think about something.

If we just rented bare metal servers, how much more hardware we could get if cost ratio is 28x for the same price? 28x more cores. 51x more memory.

How about this scaling strategy: we just buy 10x more hardware than we need with bare metals, don't give a crap about scaling at all for a while, and we're roughly still 3x cheaper than AWS?

Or should we run some virtualized garbage, barely breathing services in tiny VMs and just keep using hardware at 28x cost after spending effort to optimize it to the best of our ability?

Automatic updates and security patches

Since I'm running NixOS I only do updates once couple years or so. Some people might say software is too old, I'm like, whatever. If some breach is serious, like openssl heartbleed then you'll hear about it. Most of what people consider "security" issues are imaginary and in their head.

Are your servers only accessible through SSH keys you own?

Are your private connections secured through Wireguard VPN?

Are your ZFS datasets encrypted and all secrets in memory, in case someone tries to boot server through installer USB stick or CD?

Is swap disabled on your servers?

I can answer yes to all of these, so, for me, patching just because there's a new version - I don't care. I'm building products most of the time these days and Eden platform lets me focus on that.

In fact, I think I can write other post, that the update procedure itself is a literal attack vector instead - everyone is being told update update update ASAP. I'll let everyone else update with fresh code, test it out for breaches in production, hear stories about how they were hacked and latest data breaches and then decide to update or not and to which version.

So, again, this is a non issue, and if you'll run your virtual machines in the cloud (you 99% will) you'll be responsible for updating the OS anyway.

Faster time to market

I don't understand this argument at all from cloud proponents. Right now, I already own a lot of hardware for me, my servers are almost idle. As long as I'm not doing some business which would require training/inferring AI models (that's just a modest share of 99% businesses out there that actually make money and not just blindly splash VC funds to the left and right) I can build hundreds of different website ideas on the same servers until I code website or service that is used by enough customers that I'd need to expand to more servers. Once I've built Eden platform I'm spending most of the time building new businesses. Sometimes I need to replace a disk, but my servers keep running because ZFS makes disk failure undetectable to applications.

Managed services

In Eden platform I have managed:

- HA postgres

- HA S3 (MinIO)

- HA queue system (NATS)

Everything is setup in a highly available manner and compile time error will tell me that, say, I only have 2 NATS instances if 3 are needed for Jetstream quorum.

If I don't like anything about the managed service, I own it and can change it, make more things configurable and so on. I could even fork the underlying components if I wanted. Good luck to do the same with cloud services.

Better customer experience

This point makes me really pissed. As having been on the side of both trenches I can confidently say that managing even thousands of bare metal services is simpler than dealing with cloud bullshit.

I only remember something magical few times, like new batch of servers shutting down within 24h, but we did Linux update and the issue was gone. Other than that, it is much more easy to debug once you actually own your own hardware. I know physical limits of my network ports and switches, just put monitoring on if they're reached, server/hard drive temperatures and if you're running NixOS nothing really unpredictable will happen. Once I worked out initial issues in my infrastructure, adjusting ventilation for good server temperature I forgot I have these servers. They're just running. Sometimes there's electricity loss but I have monitoring for that, I know when to reload in memory secrets and my systems are highly available - any DC or server can go down and my projects still work.

Now let me talk about some of the biggest pain points I've been dealing with, and the root cause is... the goddamn cloud

- Some component is alerting, running unreliably. Root cause? Its running on spot instance to "save costs" and it gets often shut down because AWS is taking our spot instance from us.

In my infrastructure this will never happen. My servers go down very rarely and jobs are rescheduled to run somewhere else. Even a shitty, unreliable software will run a lot better if it os not shutdown hundreds of times per year because we run on spot instances. And even spot instances don't deliver any real cost savings we could get from bare metals anyway, so what was the point of running it in the cloud?

- Magical connection disconnects for one service. Root cause? Some AWS bullshit limit was hit.

Of course, we don't own networking of AWS. We can't easily monitor it. Our network is doing who knows what. It amazes me that open source people would move heaven and earth not to run some proprietary software on their servers and proprietary software components are becoming extinct. But for some reason, it is okay to host in the cloud, where now you need to face myriad of magical networking problems because you don't have access to their network? How is this a thing? In hetzner your servers just use the VLAN and you configure it. That's it, as long as you're below the limits of the switch and the network card, which you can benchmark with iperf you'll be good. No magic bullshit because this AWS instances happen to have specific stuff about networking or whatever.

- Server becomes unresponsive. Root cause? AWS instance reached memory limits.

You know what happens on bare metals when this happens? Just process gets killed, I get an alert that process is killed. Most of the developers are aware of this issue. Their server doesn't become unresponsive.

- Docker container doesn't start. Root cause? It was built on a newer server with newer x86_64 instruction set and our AWS server was ancient.

I'll be fair, this could happen in old bare metals. But why I bring this point to attention is, Jeff Bezos knows that every server is valuable. They'll never decomission old severs as long as there's any life in them. Think about this, if you saw an ancient server from 15 years ago you'd say "I don't want it, its old". But if the same dusty ancient server ends up as one of your instances - it is still good for Bezos to give it to you as a VM! How in the world does that make sense?

- K8S Pod doesn't start on a node. Root cause? Not enough elastic network interfaces on a node

Again, will never happen in Eden platform. Our networking is extremely simple and doesn't need bandaid services running besides the ancient routing protocols running the entire internet, written by old graybeards that were designed well before even AWS was a thing. Every server in Eden platform has its own ip and containers use host networking for maximum efficiency. Caveat is, for every service you have to pick unique ports. What happens if I pick duplicate port? Its a compile time error in Eden platform. I can even code with OCaml to automatically pick free ports like DNS names. Much superior to having ENI exhaustion alerts and debugging issues in the middle of the night...

Basically, all the worst magical crazy bullshit issues that I've had - most of it was in the cloud environment. If I'm doing NixOS on bare metals I'm learning about Linux and it runs on most of the machines on the face of this earth. If I'm debugging AWS networking I'm just learning about meaningless AWS garbage that might just be a passing fad.

In conclusion

AWS is an amazing business model:

- You pay a shit ton more for their services

- You get to debug all the bullshit problems with their infrastructure and prevent them on your own

- You're the one blamed in case things stop working.

AWS is like a pedophile that always gets away easily by blaming its victims for everything and is motivated to perform more and more crime without anyone to stop it. Amazing customer experience!

So, I guess at this point, there's clearly not enough evidence to prove that cloud is an absolute trash and no whitepapers are written and this is a pseudoscience. But of course, at the end of the cloud era there will be people who knew which was better without whitepapers and there will be the blind followers who will take in all the damage until the mainstream viewpoints have shifted.

Good luck with your AWS account, whitepaper faggots!

And the rest of you have a good day