Today I will talk about masculinity and how it is necessarily related to divinity. After all, I've discussed in the masculine and feminine planes thesis how the concept of the sexes can explode an individual developer's productivity at the right place and at the right time.

First of all, let's start with the fact that God created man in the image of God:

So God created man in his own image, in the image of God created he him; male and female created he them. - Genesis 1:27

And it says that man was created in the image of God, but not a woman. Okay, how to leverage this? Think of having a son and you really like software development. Wouldn't you be very proud of your son if you taught him to code like you do and he also became a rockstar developer? That would be a very good feeling for you. So, you'd appreciate if someone followed you in your footsteps, you'd be happy that someone resembles the image of you in their young age. You'd want to reward such son of following your footsteps. You could answer all his questions about coding. But, if your son deviates from you, and becomes someone like mechanic or a street cleaner, that would be fine but wouldn't be as ideal. You couldn't help them much in their specialty if it deviates from you.

So, in the same way there exist men that are all over the spectrum in resembling the image of God. At the very beginning of the spectrum you get feminine, weakling faggitty sissies. At the end of the spectrum you have someone like king Nebuchadnezzar, who brought the entire world to his knees.

The tree that thou sawest, which grew, and was strong, whose height reached unto the heaven, and the sight thereof to all the earth; Whose leaves were fair, and the fruit thereof much, and in it was meat for all; under which the beasts of the field dwelt, and upon whose branches the fowls of the heaven had their habitation: It is thou, O king, that art grown and become strong: for thy greatness is grown, and reacheth unto heaven, and thy dominion to the end of the earth. - Daniel 4:20-22

God is the ruler of heaven and earth. Needless to say, man will always be lower than God in control, but a man can still be a ruler within his limited scope.

Divine attributes

So, man's attributes determine how close he is to the image of God. Let's see some of the divine attributes of the Lord:

And the LORD brought us forth out of Egypt with a mighty hand, and with an outstretched arm, and with great terribleness, and with signs, and with wonders: - Deuteronomy 26:8

1. God is infinitely strong and mighty

O LORD, how manifold are thy works! in wisdom hast thou made them all: the earth is full of thy riches. - Psalms 104:24

To him that by wisdom made the heavens: for his mercy endureth for ever. - Psalms 136:5

2. God is infinitely wise

One thing have I desired of the LORD, that will I seek after; that I may dwell in the house of the LORD all the days of my life, to behold the beauty of the LORD, and to enquire in his temple. - Psalms 27:4

3. God is infinitely beautiful

And the LORD passed by before him, and proclaimed, The LORD, The LORD God, merciful and gracious, longsuffering, and abundant in goodness and truth, - Exodus 34:6

4. God is merciful

And I saw heaven opened, and behold a white horse; and he that sat upon him was called Faithful and True, and in righteousness he doth judge and make war. - Revelation 19:11

5. God is just and can make war

But the hour cometh, and now is, when the true worshippers shall worship the Father in spirit and in truth: for the Father seeketh such to worship him. - John 4:23

6. The LORD seeks people that will worship him

And he hath on his vesture and on his thigh a name written, KING OF KINGS, AND LORD OF LORDS. - Revelation 19:16

7. The LORD is the ultimate ruler

As a man if you implement God's attributes you will be rewarded with love of women and offspring of a traditional family.

For instance, if you are low in the looks department, you will never fully resemble the perfect image of God, but you can compensate by getting jacked in the gym. Also, women drool over intelligent men and praise them for that. Woman will choose to be with a man that is worthy of worship and will most closely resemble the image of God. That's why they try to get the ultimate best mate that they can, and men only look for a few things in woman. Sure, a bad boy may have only trait of being beautiful and ruling to a woman, and woman will naturally fall for that. But ultimately, bad boys generally don't create strong traditional families, for that ideally you need full package of the image of God and to be aware of all those traits to create a stable traditional family. Needless to say, you need wisdom to make money and not be dumpster diving. You need to be ruler of your family to prevent stupid nonsense like daughters wasting their precious virginity in drunk one night stand. You need to dispense judgement and chastise children correctly so they would get a sense of righteousness in a family. You also need to be in control of your woman so she would be happy, otherwise she will not consider you the lord worthy of worship and might run away to other men.

And the reward is the offspring, like promised to Abraham:

And I will make thy seed as the dust of the earth: so that if a man can number the dust of the earth, then shall thy seed also be numbered. - Genesis 13:16

As arrows are in the hand of a mighty man; so are children of the youth. Happy is the man that hath his quiver full of them: they shall not be ashamed, but they shall speak with the enemies in the gate. - Psalms 127:4-5

The entire red pill movement is based on what they say treating women like crap. The underlying reason is not that women need to be treated like crap all the time. The underlying reason why women are sexually attracted to that is that women know they can't be right, they are not as blessed in wisdom and understanding as men and they seek a man who can set them straight. A man determines rules for a woman, they can be evil or good but the women will follow them because they are wired to respond to judgement and shaming from her lord. Just like man ought to be drawn to worship someone who knows everything and understands everything, like the LORD.

The hatred of the image of God in the world

Now, the leftist cucks, the vast majority of the contingent of the software developers today despise the LORD and the image of God. They despise that women are not equal to men. They despise masculine figures that act like God, with might and judgement, i.e. Donald Trump. These faglets also despise the gym and cannot admit that women are attracted to strong muscular men. These faglets also despise wisdom and have none, are ever learning about worthless concepts but cannot come the truth, reality of the pattern and masculine and feminine planes:

Ever learning, and never able to come to the knowledge of the truth. - 2 Timothy 3:7

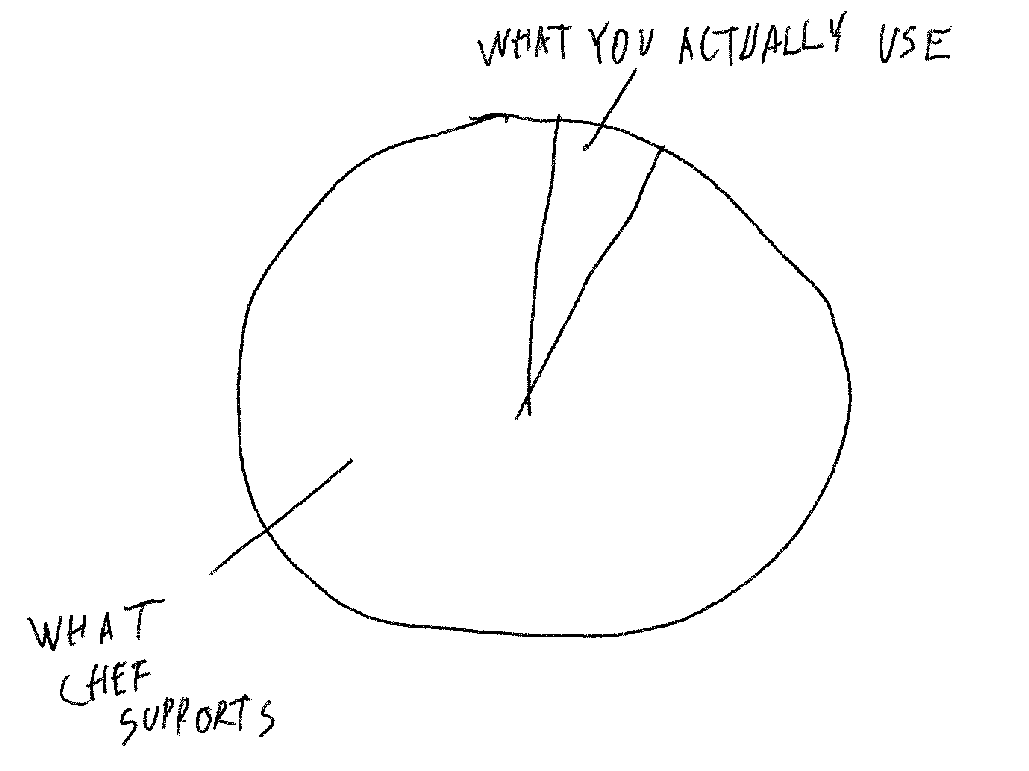

These are the fagglets who put rings on fingers of used up sluts when they squander all their youth and beauty on men who respect them the least. These are the cucks whose wifes cheat on them and they're the vast majority of the 30% of fathers globally who raise not their own children. That's why I'm so harsh to these people. These people are ignorant turd flinging monkeys. They cannot comprehend nor appreciate the divine design and its perfection. They neither are capable of engineering anything decent themselves neither they can teach others the correct way. Look at all the overengineered worthless garbage that is in the landscape of CNCF https://landscape.cncf.io/ . Hundreds if not thousands of projects of absolute insanity, where 99% of problems could be solved in a single codebase with the pattern. Every so few years new fads come and leave, like microservices, kubernetes, golang etc. that, once people practically find out that stuff doesn't work this way either, after wasting millions of dollars in overengineered designs they need to either hire hundreds more engineers to maintain all that mess or they have to rewrite everything from scratch again. There is an utter confusion of how to build anything if you don't have experience and greenhorn devs will pick the newest hottest "state of the art" overcomplicated garbage to achieve their goals. And the contractors are living in the infinite gold mine of overcomplicated unnecessary work.

There can only be two divisions, good vs evil. There are only two directions, say, being weak and being strong. Wise and unwise. Strict rules and discipline or a mess. Typesafety or dynamic typing. To go towards good, people need to strive to move to the good direction. Men ought want to be strong, wise and disciplined. Women ought to want to be chaste and submissive. Anything that goes against that is evil and ought to be shamed and scorned to maintain good. Get understanding and hate every false way.

Through thy precepts I get understanding: therefore I hate every false way. - Psalms 119:104
