- Welcome to My Coding Cult.

My Rants / High IQ is overrated

Last post by CultLeader - July 04, 2021, 12:18:09 PM

Today a lot of dumb people boast, "My IQ is so and so." They even commit the fallacy of saying, "My IQ is so and so, hence everything I say must be right." Good thing reality and correctness don't care about IQ and are not bothered by meaningless arguments from authority. Reality and real wisdom are easy to understand and easy to recognize. Wisdom doesn't need to be forced; people come to its conclusions of their own free will, for their own benefit first and for the benefit of others. When you hear wisdom in reality, you don't need a PhD to know that what is said is true; it just becomes obvious. With wisdom, what you usually do is connect the dots people already have in their heads, systematize their thought process and make it simpler. You do this until all complexity is eliminated and only the obvious parts that have to be there are left. That is wisdom.

Then came the officers to the chief priests and Pharisees; and they said unto them, Why have ye not brought him? The officers answered, Never man spake like this man. - John 7:45-46

Ordinary people without higher education heard Jesus speak, and wisdom burst out of every word he said. They didn't need PhDs or Bible colleges to grasp most of what he said. James says the same thing in other words:

But the wisdom that is from above is first pure, then peaceable, gentle, and easy to be intreated, full of mercy and good fruits, without partiality, and without hypocrisy. - James 3:17

Now let's talk about some of the dumbest, most pretentious intellectual midgets who somehow have audacity to perceive themselves as smart. That's right, I'm talking about good old leftist cucklets soy dripping software developers.

You see, a humble person who knows he's not that sharp is not a problem. You can reason with such people easily. But when an idiot perceives himself as smart, it is much harder.

What am I talking about? For instance, in daily work, someone made a PR where they used a mutex to guard access from two different threads. I left a comment: "Well, if you just order the code in such a way, you don't need locks at all." The idiot merged anyway. And, of course, that person is perceived as smart on the team and has a PhD in who knows what; I don't care. Yet when presented with two options, one being to use a mutex to synchronize access and the other to just reorder the code so no locks are needed at all, this person picked the more complex way. Imagine that: a person has a PhD and yet is an imbecile.
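The PR itself isn't shown, so here is only a hypothetical Python illustration of the two options described above: guarding a shared total with a mutex, versus ordering the work so each thread writes only its own data and the results are combined after the threads finish. The function names and the summing workload are assumptions made for this sketch.

```python
import threading


def count_with_lock(chunks):
    """Option 1: all threads update one shared total, so a mutex guards it."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        for value in chunk:
            with lock:  # every update to the shared total must hold the lock
                total += value

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def count_without_lock(chunks):
    """Option 2: each thread writes only its own slot; results are combined after join()."""
    partials = [0] * len(chunks)

    def worker(index, chunk):
        # No other thread reads or writes partials[index] while this runs.
        partials[index] = sum(chunk)

    threads = [threading.Thread(target=worker, args=(i, c)) for i, c in enumerate(chunks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # the combine step below runs only after every thread is done
    return sum(partials)
```

Both return the same result, for example `1999000` for the chunks `list(range(0, 1000))` and `list(range(1000, 2000))`. In the second version the only ordering that matters is that `join()` returns before the partial sums are combined, so there is nothing left for a lock to protect.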

And such people love overusing complex software development strategies, patterns, overengineer crap out of everything and yet these same people mock someone who works in a construction site or drives a truck as being dumb because they cannot code. Why are software developer salaries so high anyway and why are there so few of them? There is a big barrier of entry and indeed you need lots of smarts in the current climate to understand all the crazy complex mess that we ourselves have created in the first place. Imagine all the rotting Javascript in the world which soy dripping faggots debug day and night and will move heaven and earth to keep pushing abomination of NodeJS instead of some rock solid statically typed language. You see, the work of a construction worker is reduced to such simplicity with great wisdom so that very many people could join in up to speed. How long construction has been going on, for thousands of years? This timeframe has allowed for the craft of building to have all sorts of tools that will get the job done.

What did the first house ever built look like? Probably like crap. And whoever built it (Adam?) likely made lots of mistakes. But that's fine: the more time passes, the more practice accumulates and the easier everything becomes. And of course, in the market for the first houses there were very few skilled craftsmen who knew the dos and don'ts, and they probably charged a hefty premium to build someone a house, like software developers today. Eventually this workforce became cheaper.

Consider software development. How long has it been around? About 50 years at the time of this writing? We're in the very infancy of this craft, where the first attempts bred insane complexity (by imbeciles who think they are smart) that very few people can maintain and understand. And yet they brag about how all the garbage they built is so complex that only a few priests can maintain it, collect much higher salaries than the rest of the people, and then mock people who work in a craft developed over thousands of years, like construction, where everything has been reduced to simplicity and all the dos and don'ts are known and understood. Some people work on software projects so long that, seeing an office being built across the street, they bet they won't finish their project before the office is done. And they're often right. These imbeciles cannot use their keyboards wisely or make wise decisions, to such an extent that a cheaper workforce puts up a whole building in front of their eyes. If that does not speak volumes about the extreme stupidity of most software developers today, I don't know what does.

Today, software development goes like this, to use a building analogy: two people build separate walls without seeing each other's work. The walls are of different heights, yet they find out only when they try to attach the roof and it doesn't fit. This is finding out about issues in production. What is the remedy for this in house building? There is a model, a project, in which architects work out in an imaginary plane how the house looks and model it. You can't just do all this in your head, the way software architects draw diagrams and pray they work. A building model, say in AutoCAD, checks that walls are of appropriate height, so such problems are detected in AutoCAD and don't appear in production. And there is no question about what height a wall should be; it is decided in the model. So when I read postmortems about mistakes like, say, a database field being removed while it was still used in the frontend, so the website breaks with a null pointer exception, this is pathetic. These people have no plan, no model where everything is worked out.
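To make the field-removal example concrete, here is a hypothetical Python sketch of the kind of pre-deployment check a building model implies: the schema and the frontend's field references are both kept as data, and any reference to a missing table or column is reported before anything ships. `FieldUse` and `check_frontend_against_schema` are illustrative names, and how the field references would be collected from a real frontend is left as an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldUse:
    """One place where the frontend reads a database field (hypothetical model)."""
    component: str
    table: str
    column: str


def check_frontend_against_schema(schema, uses):
    """Return an error for every field the frontend uses but the schema lacks.

    schema: dict mapping table name -> set of column names
    uses:   iterable of FieldUse entries collected from the frontend
    """
    errors = []
    for use in uses:
        columns = schema.get(use.table)
        if columns is None:
            errors.append(f"{use.component}: table '{use.table}' does not exist")
        elif use.column not in columns:
            errors.append(f"{use.component}: column '{use.table}.{use.column}' does not exist")
    return errors
```

For example, with `schema = {"users": {"id", "email"}}` after `display_name` was dropped, a `FieldUse("ProfilePage", "users", "display_name")` entry yields `["ProfilePage: column 'users.display_name' does not exist"]`. A build step that refuses to deploy while this list is non-empty catches the problem the way the model catches a wall of the wrong height.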

Such issues are worked out with the pattern, where one executable has everything related to the project. We can check that a database query uses indexes, so it never does sequential scans in production. We can check whether a query is used at all, since we have it as data in our plan. How many fewer postmortems would you have if you checked such details before deployment and enforced that such crooked buildings never get deployed? I simply remove 90%+ of complexity with the pattern, by catching issues early so I don't have to deal with them at all. The pattern allows you to build code like a building: you lay the foundations, then build more and more on top, and the code scales like a building. If there are cross-context inconsistencies, you know instantly instead of finding out about them in production. With the pattern you, as one developer, can do the job of 100 developers and know everything will work together, hence drastically reducing the cost of projects. The pattern is like having a building plan; today's imbecile developers build buildings without a plan! And Jira is not a plan, mkay?
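The two checks mentioned above, index usage and unused queries, can be sketched in Python once queries are declared as data. This is a hypothetical sketch: `Query`, `queries_without_index` and `unused_queries` are illustrative names, and the index rule is deliberately simplified (an index is assumed usable only when its first column is among the query's equality filters). A real planner such as PostgreSQL's makes this decision itself, so a production check would rather inspect `EXPLAIN` output from a real database.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Query:
    """A query declared as data in the plan (hypothetical model)."""
    name: str
    table: str
    filter_columns: tuple  # columns compared with '=' in the WHERE clause


def queries_without_index(queries, indexes):
    """Names of queries whose filters match no index's leading column.

    indexes: dict mapping table name -> list of index column tuples, e.g.
             {"users": [("email",), ("org_id", "created_at")]}
    Simplified assumption: an index counts as usable only when its first
    column appears among the query's equality filters.
    """
    missing = []
    for q in queries:
        leading = {cols[0] for cols in indexes.get(q.table, []) if cols}
        if not leading.intersection(q.filter_columns):
            missing.append(q.name)
    return missing


def unused_queries(queries, call_sites):
    """Names of declared queries that no code path references."""
    used = set(call_sites)
    return [q.name for q in queries if q.name not in used]
```

With `indexes = {"users": [("email",), ("org_id", "created_at")]}`, a query filtering `users` by `name` is flagged, while queries filtering by `email` or `org_id` pass.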

And guess what? If you have well established meta executable it becomes trivial to develop with it. You need REST endpoint with the pattern? Add another row in the table of rest endpoints, define its signature, code gets generated to fill in the blank for the trivial typesafe implementation and you're done. And working in a feminine plane only you'll have much simpler job and hence, more people can do it, hence workforce for software development projects can be cheaper. And of course, leftist cuck wet dream or nightmare, more women can finally code in less understanding requiring feminine plane and get paid lower salary than few men who work in masculine plane which requires all the understanding of how everything works together, LMAO.
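The post describes the endpoint table only at a high level and names no generator or table format, so this is a hypothetical Python sketch of the idea: endpoints live as rows of data, a validation pass rejects duplicates, and a generator emits typed handler stubs whose bodies the developer fills in. `Endpoint`, `ENDPOINTS`, `validate` and `generate_stubs` are illustrative names, not part of any real framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    """One row in the (hypothetical) table of REST endpoints."""
    name: str
    method: str
    path: str
    request_type: str
    response_type: str


ENDPOINTS = [
    Endpoint("get_user", "GET", "/users/{id}", "GetUserRequest", "User"),
    Endpoint("create_user", "POST", "/users", "CreateUserRequest", "User"),
]


def validate(endpoints):
    """Reject duplicate handler names or duplicate (method, path) routes."""
    seen_names, seen_routes = set(), set()
    for ep in endpoints:
        route = (ep.method, ep.path)
        if ep.name in seen_names or route in seen_routes:
            raise ValueError(f"duplicate endpoint: {ep.name} {ep.method} {ep.path}")
        seen_names.add(ep.name)
        seen_routes.add(route)


def generate_stubs(endpoints):
    """Emit Python source with one typed handler stub per endpoint row."""
    validate(endpoints)
    lines = []
    for ep in endpoints:
        lines.append(f"# {ep.method} {ep.path}")
        lines.append(f"def {ep.name}(req: {ep.request_type}) -> {ep.response_type}:")
        lines.append("    raise NotImplementedError  # fill in the blank")
        lines.append("")
    return "\n".join(lines)
```

Calling `generate_stubs(ENDPOINTS)` yields one stub per row, for example `def get_user(req: GetUserRequest) -> User:` with a `NotImplementedError` body to fill in. The request and response type names only appear in annotations here; defining them is outside this sketch.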

So this is why I don't view having a high IQ a very important thing. Once you start doing things with the pattern your life becomes so simple and trivial that you don't need to read whitepapers on how most hardcore locking primitives work. You just build abstractions that don't require them, because you see big picture earlier. That's why most leftist faglets, you can keep complaining about how women are attracted to masculine men and how nobody appreaciates your imaginary smarts in the complex abomination of a reality that you yourselves have created. And such leftist cucks will also not reproduce sufficiently in the long term and disappear from this earth themselves in due time. Complexity is not how nature works, it is simple, neither are we traveling around the sun (if you want a quick summary check this out https://www.youtube.com/watch?v=WffliCP2dU0 ), this is other thing pagan leftist sun worshipper cucks created in their mind to pervert and pollute mind about the complexities of nature which is designed simply and beautifully by the Master Builder of the bible.

Peace out bois.

Natural Philosophy / The Image of God

Last post by CultLeader - June 20, 2021, 08:36:49 AM

Today I will talk about masculinity and how it is necessarily related to divinity. After all, in the masculine and feminine planes thesis I've discussed how the concept of the sexes can explode an individual developer's productivity at the right place and at the right time.

First of all, let's start with the fact that God created man in the image of God:

So God created man in his own image, in the image of God created he him; male and female created he them. - Genesis 1:27

And it says that man was created in the image of God, but not a woman. Okay, how to leverage this? Think of having a son and you really like software development. Wouldn't you be very proud of your son if you taught him to code like you do and he also became a rockstar developer? That would be a very good feeling for you. So, you'd appreciate if someone followed you in your footsteps, you'd be happy that someone resembles the image of you in their young age. You'd want to reward such son of following your footsteps. You could answer all his questions about coding. But, if your son deviates from you, and becomes someone like mechanic or a street cleaner, that would be fine but wouldn't be as ideal. You couldn't help them much in their specialty if it deviates from you.

So, in the same way there exist men that are all over the spectrum in resembling the image of God. At the very beginning of the spectrum you get feminine, weakling faggitty sissies. At the end of the spectrum you have someone like king Nebuchadnezzar, who brought the entire world to his knees.

The tree that thou sawest, which grew, and was strong, whose height reached unto the heaven, and the sight thereof to all the earth; Whose leaves were fair, and the fruit thereof much, and in it was meat for all; under which the beasts of the field dwelt, and upon whose branches the fowls of the heaven had their habitation: It is thou, O king, that art grown and become strong: for thy greatness is grown, and reacheth unto heaven, and thy dominion to the end of the earth. - Daniel 4:20-22

Just as God is the ruler of heaven and earth, a man can still be a ruler within his limited scope, though, needless to say, he will always be lower than God in control.

Divine attributes

So, a man's attributes determine how close he is to the image of God. Let's look at some of the divine attributes of the Lord:

And the LORD brought us forth out of Egypt with a mighty hand, and with an outstretched arm, and with great terribleness, and with signs, and with wonders: - Deuteronomy 26:8

1. God is infinitely strong and mighty

O LORD, how manifold are thy works! in wisdom hast thou made them all: the earth is full of thy riches. - Psalms 104:24

To him that by wisdom made the heavens: for his mercy endureth for ever. - Psalms 136:5

2. God is infinitely wise

One thing have I desired of the LORD, that will I seek after; that I may dwell in the house of the LORD all the days of my life, to behold the beauty of the LORD, and to enquire in his temple. - Psalms 27:4

3. God is infinitely beautiful

And the LORD passed by before him, and proclaimed, The LORD, The LORD God, merciful and gracious, longsuffering, and abundant in goodness and truth, - Exodus 34:6

4. God is merciful

And I saw heaven opened, and behold a white horse; and he that sat upon him was called Faithful and True, and in righteousness he doth judge and make war. - Revelation 19:11

5. God is just and can make war

But the hour cometh, and now is, when the true worshippers shall worship the Father in spirit and in truth: for the Father seeketh such to worship him. - John 4:23

6. The LORD seeks people who will worship him

And he hath on his vesture and on his thigh a name written, KING OF KINGS, AND LORD OF LORDS. - Revelation 19:16

7. The LORD is the ultimate ruler

As a man if you implement God's attributes you will be rewarded with love of women and offspring of a traditional family.

For instance, if you are low in the looks department, you will never fully resemble the perfect image of God, but you can compensate by getting jacked in the gym. Also, women drool over intelligent men and praise them for that. Woman will choose to be with a man that is worthy of worship and will most closely resemble the image of God. That's why they try to get the ultimate best mate that they can, and men only look for a few things in woman. Sure, a bad boy may have only trait of being beautiful and ruling to a woman, and woman will naturally fall for that. But ultimately, bad boys generally don't create strong traditional families, for that ideally you need full package of the image of God and to be aware of all those traits to create a stable traditional family. Needless to say, you need wisdom to make money and not be dumpster diving. You need to be ruler of your family to prevent stupid nonsense like daughters wasting their precious virginity in drunk one night stand. You need to dispense judgement and chastise children correctly so they would get a sense of righteousness in a family. You also need to be in control of your woman so she would be happy, otherwise she will not consider you the lord worthy of worship and might run away to other men.

And the reward is offspring, as promised to Abraham:

And I will make thy seed as the dust of the earth: so that if a man can number the dust of the earth, then shall thy seed also be numbered. - Genesis 13:16

As arrows are in the hand of a mighty man; so are children of the youth. Happy is the man that hath his quiver full of them: they shall not be ashamed, but they shall speak with the enemies in the gate. - Psalms 127:4-5

The entire red pill movement is based on what they say treating women like crap. The underlying reason is not that women need to be treated like crap all the time. The underlying reason why women are sexually attracted to that is that women know they can't be right, they are not as blessed in wisdom and understanding as men and they seek a man who can set them straight. A man determines rules for a woman, they can be evil or good but the women will follow them because they are wired to respond to judgement and shaming from her lord. Just like man ought to be drawn to worship someone who knows everything and understands everything, like the LORD.

The hatred of the image of God in the world

Now, the leftist cucks, the vast majority of the contingent of the software developers today despise the LORD and the image of God. They despise that women are not equal to men. They despise masculine figures that act like God, with might and judgement, i.e. Donald Trump. These faglets also despise the gym and cannot admit that women are attracted to strong muscular men. These faglets also despise wisdom and have none, are ever learning about worthless concepts but cannot come the truth, reality of the pattern and masculine and feminine planes:

Ever learning, and never able to come to the knowledge of the truth. - 2 Timothy 3:7

These are the fagglets who put rings on fingers of used up sluts when they squander all their youth and beauty on men who respect them the least. These are the cucks whose wifes cheat on them and they're the vast majority of the 30% of fathers globally who raise not their own children. That's why I'm so harsh to these people. These people are ignorant turd flinging monkeys. They cannot comprehend nor appreciate the divine design and its perfection. They neither are capable of engineering anything decent themselves neither they can teach others the correct way. Look at all the overengineered worthless garbage that is in the landscape of CNCF https://landscape.cncf.io/ . Hundreds if not thousands of projects of absolute insanity, where 99% of problems could be solved in a single codebase with the pattern. Every so few years new fads come and leave, like microservices, kubernetes, golang etc. that, once people practically find out that stuff doesn't work this way either, after wasting millions of dollars in overengineered designs they need to either hire hundreds more engineers to maintain all that mess or they have to rewrite everything from scratch again. There is an utter confusion of how to build anything if you don't have experience and greenhorn devs will pick the newest hottest "state of the art" overcomplicated garbage to achieve their goals. And the contractors are living in the infinite gold mine of overcomplicated unnecessary work.

There can only be two divisions, good vs evil. There are only two directions, say, being weak and being strong. Wise and unwise. Strict rules and discipline or a mess. Typesafety or dynamic typing. To go towards good, people need to strive to move to the good direction. Men ought want to be strong, wise and disciplined. Women ought to want to be chaste and submissive. Anything that goes against that is evil and ought to be shamed and scorned to maintain good. Get understanding and hate every false way.

Through thy precepts I get understanding: therefore I hate every false way. - Psalms 119:104


There can only be two divisions, good vs evil. There are only two directions, say, being weak and being strong. Wise and unwise. Strict rules and discipline or a mess. Typesafety or dynamic typing. To go towards good, people need to strive to move to the good direction. Men ought want to be strong, wise and disciplined. Women ought to want to be chaste and submissive. Anything that goes against that is evil and ought to be shamed and scorned to maintain good. Get understanding and hate every false way.

Through thy precepts I get understanding: therefore I hate every false way. - Psalms 119:104

#33

Main Thesis / Part 7 - Whole is greater than...

Last post by CultLeader - June 10, 2021, 07:11:20 AM

Today, I'll explain from another angle why the pattern and the meta executable will always be greater than doing things by hand.

I love to use real life analogies. They are amazing, you can explain very advanced concepts using terms every day Joe can understand. After all, every decent system relies on universal principles. For instance, father of the household is like God to his family (why God calls himself Father? So we'd understand him with earthly analogies of what father is?). Country with king is also same as God and his followers. Every natural divine system follows principle of masculine, godlike control plane and feminine, submissive plane that simply enjoys the fruits godlike control plane that thought everything out logically to be used.

Leftist cucks, since they went without divine wisdom in engineering for a long time, only produced abominations ever since by deviating from this truth. Well, not necessarily deviating, but rather being ignorant and never aware of that. Relying on their own pathetic weasel understanding, producing needless complexity everywhere without end and extreme toils and hard work for future generations to come maintaining that garbage.

In the typical software development world today, if you need a database, someone deploys a database and you use it. If you need queues, someone deploys Kafka. Need them working together? You deploy some other garbage. There is simply no system that connects them all, so that everything works towards the same purpose.

Unless there is a divine, all-knowing meta executable from the pattern that knows about all these relationships and enforces them.

If you see the whole, not just the parts separately, you can go wild. You can build extremely sophisticated abstractions that would otherwise be impossible. For instance, you can make sure a Postgres database is mirrored into ClickHouse. Someone might say, "wait, doesn't the Debezium stack do that?". Yes, it does, if you provide schema registries and all the dynamic garbage. But the Debezium stack will never check beforehand whether the target table in ClickHouse has the same schema as the source Postgres table. All this is left to the poor developer, along with a myriad of other runtime problems he will encounter in his lifetime.

You know what can easily check whether such abstractions are valid? A meta executable that knows the following:

- What Kafka clusters are running

- What databases and tables are declared in Postgres

- What databases and tables are declared in ClickHouse

- That the Postgres, ClickHouse and required Kafka instances exist

- Whether the Postgres schema matches the ClickHouse schema
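A minimal sketch of such a check in OCaml, assuming the infrastructure is declared as plain OCaml data. The types `column_type`, `table`, `replication` and `infra` and the functions `schema_mismatches` and `check_infra` are hypothetical names for illustration, not an existing library; a real meta executable would declare far more (hosts, ports, topics).

```ocaml
(* Hypothetical column types shared by the Postgres and ClickHouse declarations. *)
type column_type = Int64 | Text | Float64 | Bool

type column = { name : string; typ : column_type }

type table = { table_name : string; columns : column list }

(* Hypothetical declaration of a Postgres -> Kafka -> ClickHouse replication. *)
type replication = { source_table : string; target_table : string; via_kafka : string }

(* Everything the meta executable knows, as plain data. *)
type infra = {
  kafka_clusters : string list;
  pg_tables : table list;
  ch_tables : table list;
  replications : replication list;
}

(* Human-readable mismatches between two table declarations; [] means they match. *)
let schema_mismatches ~(postgres : table) ~(clickhouse : table) : string list =
  let find col_name cols = List.find_opt (fun c -> c.name = col_name) cols in
  let missing_or_wrong =
    List.filter_map
      (fun pg_col ->
        match find pg_col.name clickhouse.columns with
        | None -> Some (Printf.sprintf "column %s missing in clickhouse" pg_col.name)
        | Some ch_col when ch_col.typ <> pg_col.typ ->
            Some (Printf.sprintf "column %s has a different type in clickhouse" pg_col.name)
        | Some _ -> None)
      postgres.columns
  in
  let extra =
    List.filter_map
      (fun ch_col ->
        match find ch_col.name postgres.columns with
        | None -> Some (Printf.sprintf "column %s does not exist in postgres" ch_col.name)
        | Some _ -> None)
      clickhouse.columns
  in
  missing_or_wrong @ extra

(* Check every declared replication against the declared infrastructure. *)
let check_infra (i : infra) : string list =
  let find_table tbl_name tables = List.find_opt (fun t -> t.table_name = tbl_name) tables in
  List.concat_map
    (fun r ->
      match (find_table r.source_table i.pg_tables, find_table r.target_table i.ch_tables) with
      | None, _ -> [ "unknown postgres table " ^ r.source_table ]
      | _, None -> [ "unknown clickhouse table " ^ r.target_table ]
      | Some pg, Some ch ->
          (if List.mem r.via_kafka i.kafka_clusters then []
           else [ "unknown kafka cluster " ^ r.via_kafka ])
          @ schema_mismatches ~postgres:pg ~clickhouse:ch)
    i.replications
```

A meta executable would run `check_infra` before deploying anything and refuse to proceed when the list is non-empty, so a mismatched table is caught before production rather than at runtime.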

Not only that, we can generate the following:

- A remap of any row that ends up in ClickHouse

- Possibly a remap into another typesafe Kafka topic, with custom typesafe code

- Maybe ClickHouse materialized views that feed another realtime stream into a Kafka topic
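Generation follows from the same data. As a hypothetical sketch, one OCaml table declaration can emit both the Postgres and the ClickHouse DDL, so the two schemas cannot drift apart. The type mapping below (for example encoding booleans as `UInt8` in ClickHouse) and the `MergeTree` engine choice are assumptions for illustration.

```ocaml
(* Hypothetical single declaration used to generate both schemas. *)
type column_type = Int64 | Text | Float64 | Bool

type column = { name : string; typ : column_type }

type table = { table_name : string; columns : column list; order_by : string }

let postgres_type = function
  | Int64 -> "bigint"
  | Text -> "text"
  | Float64 -> "double precision"
  | Bool -> "boolean"

(* Assumed mapping; UInt8 is a conservative boolean encoding for ClickHouse. *)
let clickhouse_type = function
  | Int64 -> "Int64"
  | Text -> "String"
  | Float64 -> "Float64"
  | Bool -> "UInt8"

(* "name type, name type, ..." using the given type mapping. *)
let columns_sql (to_type : column_type -> string) (t : table) : string =
  t.columns |> List.map (fun c -> c.name ^ " " ^ to_type c.typ) |> String.concat ", "

let postgres_ddl (t : table) : string =
  Printf.sprintf "CREATE TABLE %s (%s);" t.table_name (columns_sql postgres_type t)

let clickhouse_ddl (t : table) : string =
  Printf.sprintf "CREATE TABLE %s (%s) ENGINE = MergeTree ORDER BY %s;" t.table_name
    (columns_sql clickhouse_type t) t.order_by
```

Because both statements come from one value, adding a column to the declaration changes both schemas at once.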

Possibilities are endless, because we have all the information about everything as data in the meta executable. With this approach we have a full body under our control, a body that can use all of its muscles to perform a single coordinated action, like striking a blow with a sword, doing a pushup or dancing ballet. The choice is ours. Did you notice how seamlessly our bodies, under normal conditions, make all the muscles perform a unified action according to one mind? Or how unified an army's march is? This is a divine pattern: everything is connected and coordinated to serve the same purpose.

The bodies of software development today work like this. An arm gets told to scratch the ass. The arm goes to the ass and sees the ass is sitting. The arm goes to the head to ask permission for the ass to stand up. Permission granted, the ass rises. The hand finally reaches the ass and scratches it. Imagine how bizarre this looks - this is not normal. This is nonsense, paganism. All of these problems would be trivial to resolve, even for a greenhorn developer, if all the systems were declared as data in one place. Yet people create all sorts of nonsense these days: dynamic schema registration, separate schema evolutions in the database, separate applications written to map stream to stream with no typesafe enforcement. It's extremely limiting, because you inevitably stumble into the dreaded sentence "I don't know what schemas are in this or that subsystem, so I'll use generic JSON and burden the CPU with needless generic JSON parsing".

Things could be so much better than that. As I wrote in the assumptions post, the more assumptions you make, the simpler your solutions will be. And the ONLY WAY to make these assumptions is to have all the data about your infrastructure in one place. Just as a divided kingdom cannot stand, neither can divided information about your entire project. If it is divided, there are bound to be conflicts and confusion. But if it is united, all in one place, and checked for consistency at a very high level - such a project can stand.

And if a kingdom be divided against itself, that kingdom cannot stand. - Mark 3:24

Conclusion: software development paganism, division and confusion are a cancer and cause huge complexity in software projects. Divided projects without strong interconnections between them are merely a sum of parts. A whole, where all your infrastructure is defined as data in one place, is greater than the sum of its parts and opens unlimited possibilities for correctness checking and code generation.

#34

My Rants / The real assholes of software ...

Last post by CultLeader - June 06, 2021, 09:39:29 AMToday, most software developers are delusional, low testosterone, pussified people. These people cannot hear anything negative about their programming language, their beliefs, design patterns and so on. Doe-eyed cucks today usually are more keen to accept an incompetent pussified faglet as a leader, that has no deep technical skill, no decent logical insights, but is rather smiling and diplomatic and would never hurt anyones feelings.

This is a disaster, because every improvement to our modern society was made when someone noticed that the current state of things was subpar and needed to change. One of the reasons for this mental illness in Silicon Valley is probably the fact that nobody measures the productivity of individual developers and nobody asks why one developer gets things done 10x faster while others linger for weeks on the same task. Even when a reason is given, if you're around ruby monkeys and you say "typesafety is amazing, I don't need to spend that much time debugging code and can focus on the problem", the usual knee-jerk, irrational reaction is "we write tests, so we have the same". No, you don't: the outcomes of two different methodologies can never be equal, as I've discussed in the world of inequality thesis.

So you get a huge array of different methodologies for doing things wrong. In media this is beneficial: if you were YouTube, for instance, you'd get a bigger audience by serving up 100 ways to do things wrong. It's just more content, and the more content you have, the more imaginary value. But if you go the way of simplicity, saying there is one right way to do things and the rest are a waste of time (as I teach with the pattern), then all of a sudden your clearly superior solution makes you the enemy of all the teachers who teach 100 wrong ways to do things.

You see, natural gas companies don't fight oil companies, they both can make a living selling fuel. What they all unify together against is countless of inventors, like Steven Meyer, who invented water ran car https://www.youtube.com/watch?v=staL1wr07Sg . These companies quickly smell that they can become obsolete in an eye blink and kill or persecute the inventors.

Same with programming languages: if you state that python/ruby/javascript are unproductive, unmaintainable abominations due to dynamic typing and that nobody should use them to implement any reasonably complex project, you become an enemy. All the false teachers who teach nonsense about design patterns, domain-driven design or what not quickly become enraged when a superior way to do things is shown. Nevertheless, people who seek wisdom and love righteousness will carry the truth in their hearts.

It is the same as when Jesus said the world would not accept you:

If ye were of the world, the world would love his own: but because ye are not of the world, but I have chosen you out of the world, therefore the world hateth you. - John 15:19

Any idiot can go with the flow: microservices are the thing, kubernetes is taking over the industry, golang, despite being utter trash, is getting all the tooling. Any idiot can nod his head and agree with everyone else. But it takes wisdom, the wisdom of the minority who can judge in truth. Who understand consequences and implications. Who took the beating, wrote hundreds of thousands of lines of code and know what works and what doesn't. Any greenhorn who comes out of university has his mind instantly polluted with complicated patterns and impractical technology. People in university are taught that there is Java and there is JavaScript, but no judgement comes with it: that one is typesafe and can be used to write millions of lines of code, and the other is for weekend projects.

Are people expected to come to such judgements on their own? But how can they, without hindsight? So they are doomed to wander from cult to cult without a right way. They may do Java with XMLs for a few years in a bank, then another cultist introduces them to Ruby and how little code they need to write. The Ruby cultist doesn't tell them that their production Rails app is full of undefined method errors, which a compiler in a statically typed language would catch. He doesn't tell them they waste half their time writing unit tests. Then the newly converted rubyist either gets stuck in Ruby, assumes it is normal to push a few more commits to production to fix undefined variable errors and accepts this as the norm, or, if he is more astute, thinks "statically typed languages don't have this problem". Even if they do figure it out, they have wasted years of precious time getting there, and they will never get them back.

Good thing places like this forum exist, where with the pattern you get to the ultimate end solution that can solve everything:

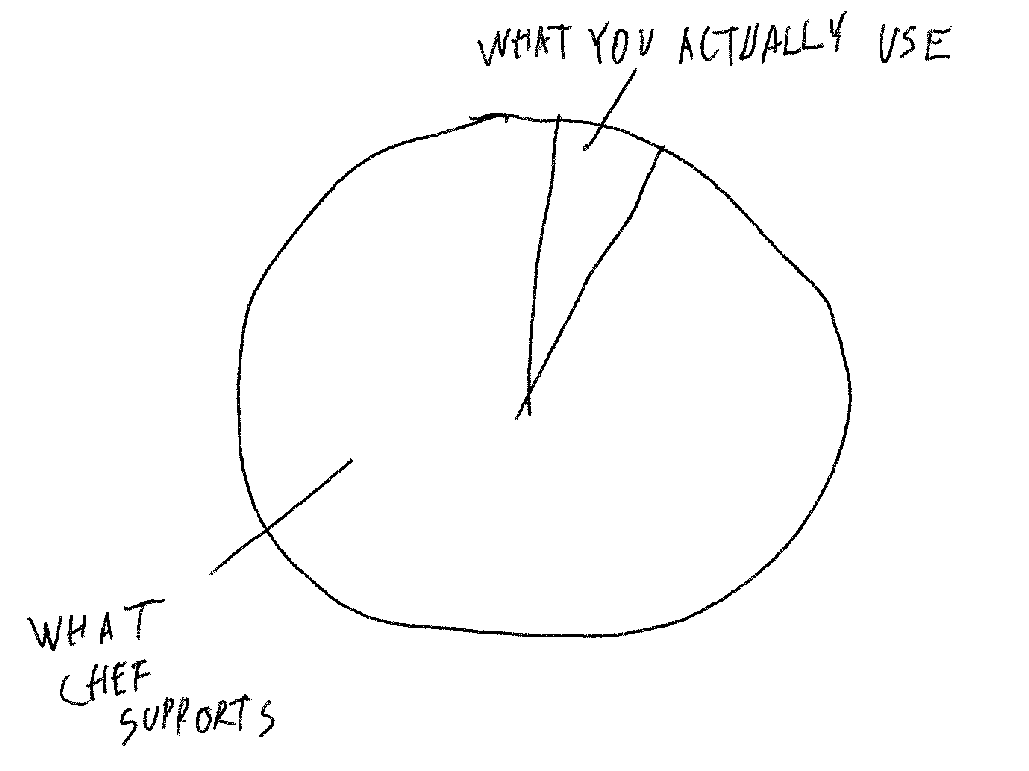

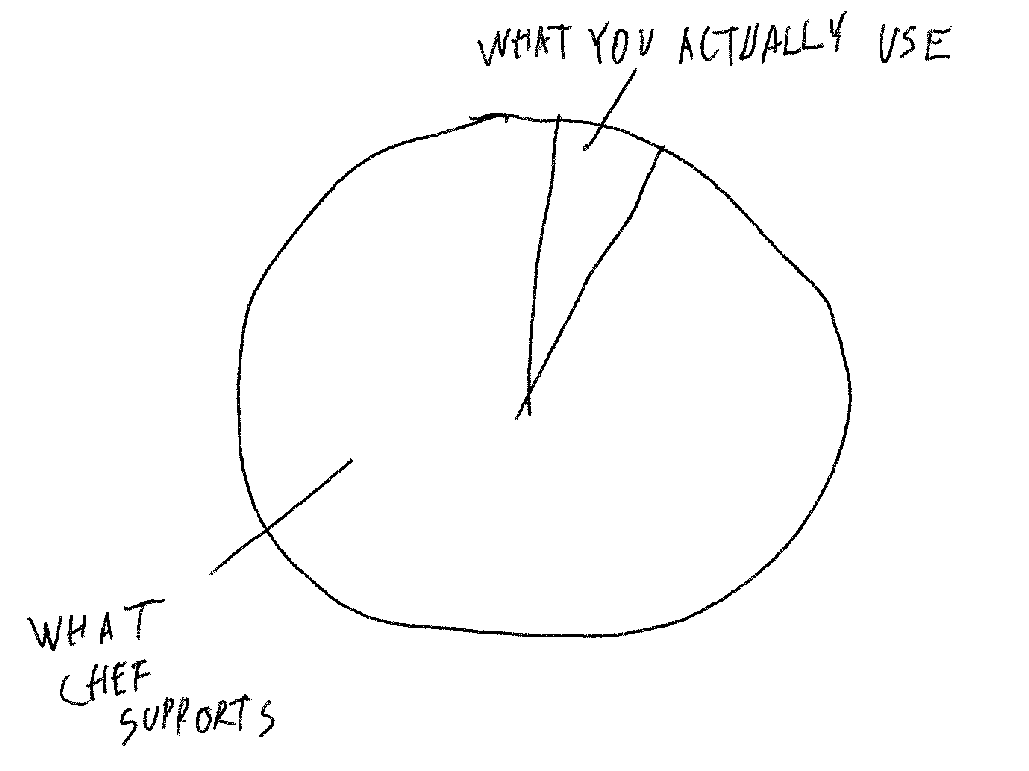

But you see, I'm the asshole. I'm the bad guy. I'm in the wrong because I say dynamically typed languages are a waste of time. I'm in the wrong because I can offer solutions for having typesafe SQL and knowing it will work before production. I'm the asshole because I say current infra management tools like Chef are fragile abominations that waste everyone's time. I'm the asshole because I say YAML files are worthless and can be replaced with a typesafe programming language to encode any configuration you want. I'm the asshole because I say most of what other people know about software development is overcomplicated nonsense. I'm the asshole because I know how to do things much more productively, saving everyone's time and money and likely needing far fewer devs to achieve the same result.

Well, as history has shown time and time again, even if you suppress solutions to problems for long enough, they'll come to the surface sooner or later.

For nothing is secret, that shall not be made manifest; neither any thing hid, that shall not be known and come abroad. - Luke 8:17

And when everyone finally realizes that all this time the emperor had no clothes, it will be like a dam breaking upon everyone. But some people just hate reality and hate the truth - this is the way of the world and it always will be.

#35

Main Thesis / Part 6 - Assumptions

Last post by CultLeader - June 04, 2021, 10:19:20 AM

I want to talk about a simple fact that permeates all of life. It is illustrated below.

The more assumptions you make, the simpler the code can be. The fewer assumptions you have, the more complex the code is.

Consider two solutions for a function that reads a file into a string of bytes. To me, this function is a curious phenomenon. Many programming languages, like Java, did not have a single function that could read an entire file's contents into a byte string back in the day. You had to use the Apache Commons jar.

Why is that? Such functions make development much easier. Many Stack Overflow answers suggest creating a buffered reader and then spinning a loop (https://stackoverflow.com/questions/731365/reading-and-displaying-data-from-a-txt-file). Why all this complexity?

The knee-jerk reaction to this is the usual "well, what if the file is 100 exabytes? You couldn't fit such a file in memory". That is true, we could not read an exabyte file into memory. But how often do we do that? If we need to manage that much data in a single file, SQLite is a better option anyway. The files we usually need to read, say a source file, a secret or a JSON file, are usually tiny. Why do we complicate the 99% of cases, where the file is small and the function to read it can be super simple, with a needless abstraction of reading it as a stream just in case it is exabytes?

Reading a stream is a generic (there is that evil word again), low-level, complex solution. If the solution is specific to small files, it is very simple: a single function that reads the whole file into memory and is done. Another thing: Java cannot assume the file is ASCII, so you have to decode that stream of bytes with some encoding, such as UTF-8, into Java's String, whose chars are, ironically, two-byte UTF-16 units. What if we make an assumption here too? UTF-8 is a universally supported format, so if we assume it, our read-file-to-string function becomes simpler again.

You see how we made two assumptions and our problem and solution became simpler?
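Under those two assumptions the whole function fits in a few lines. A minimal OCaml sketch (the name `read_file` is ours): OCaml strings are plain byte sequences, so assuming UTF-8 means no decoding step is needed at all. On OCaml 4.14 and later, `In_channel.with_open_bin path In_channel.input_all` does the same job from the standard library.

```ocaml
(* Assumption 1: the file is small enough to fit in memory.
   Assumption 2: contents are UTF-8, which an OCaml string holds as raw bytes,
   so there is nothing to decode. *)
let read_file (path : string) : string =
  let ic = open_in_bin path in
  Fun.protect
    ~finally:(fun () -> close_in ic)
    (fun () -> really_input_string ic (in_channel_length ic))
```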

Infra management

The current software development world is full of such complexities due to a lack of assumptions (a lack of information). For instance, in the infra management tool Chef, which I utterly disrespect and which I think every company would be better off replacing with its own tool written in OCaml, there are folders for files and templates. Every Chef cookbook has a default folder, and could also have windows/linux/solaris and what-not folders for templates specific to that platform. What if a company only uses a specific version of Linux? All of that becomes useless complexity. Since Chef is a generic, used up, smurfed out, whorish solution that kind of does a crappy job at everything, a jack of all trades and master of none, it also brings the complexity of every hipster company into one project for everyone to bear. Same with Kubernetes complexity.

I have heard arguments like "Well, would you rewrite Chef yourself? Do you know how many man-hours were put into that thing?". Yes, I would. I would implement only the part I need, described below.

These are the assumptions I would make about the infrastructure:

1. Use a certain version of Debian

2. All nodes must have Docker installed

3. Any component that is needed, be it postgres/kafka/clickhouse/prometheus, you name it, is installed via Docker
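Those three assumptions can be encoded directly as types. The sketch below is hypothetical: the `component` variants, image names and in-container mount paths are illustrative assumptions (check each image's documentation), but it shows the point that there is no OS branch anywhere, because the assumptions removed it.

```ocaml
(* Hypothetical component model. Assumption: every node runs one pinned
   Debian release with Docker installed, so only containers are modelled. *)
type component =
  | Postgres of { version : string; data_dir : string }
  | Clickhouse of { version : string; data_dir : string }
  | Prometheus of { version : string }

(* Container name, (host, container) volume mounts and image per component.
   Image names and in-container paths are illustrative assumptions. *)
let container_spec (c : component) : string * (string * string) list * string =
  match c with
  | Postgres { version; data_dir } ->
      ("postgres", [ (data_dir, "/var/lib/postgresql/data") ], "postgres:" ^ version)
  | Clickhouse { version; data_dir } ->
      ("clickhouse", [ (data_dir, "/var/lib/clickhouse") ], "clickhouse/clickhouse-server:" ^ version)
  | Prometheus { version } -> ("prometheus", [], "prom/prometheus:" ^ version)

(* Build the argv for `docker run`; no OS-specific branch is needed. *)
let docker_run_args (c : component) : string list =
  let name, mounts, image = container_spec c in
  let volume_args =
    List.concat_map (fun (host, inside) -> [ "-v"; host ^ ":" ^ inside ]) mounts
  in
  [ "docker"; "run"; "-d"; "--name"; name ] @ volume_args @ [ image ]
```

Adding a new component means adding a variant, and the compiler then points at every match that must handle it.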

What I don't need to implement from Chef this way in my OCaml infra management tool (which, by the way, I did implement for myself):

1. Support for any OS other than Debian Linux

2. Adding certain Debian package repositories, say, for the newest Postgres - just run it in Docker

3. All the Kitchen insanity: I'd turn component configs into data and analyze that data instead, using the pattern

What I'd improve upon:

1. Maybe implement iptables rules, if I wanted to restrict who can talk to what

2. Typesafe roles, unlike the raw JSON that Chef uses, where it is very easy to make a typo that ends up in production

3. A typesafe, no-nulls-allowed function for installing a generated infra file to the filesystem

4. systemd functions, to, say, install a certain systemd unit for things like Vector to collect logs
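A hypothetical sketch of items 2 and 4 together: a role is an OCaml record, so a misspelled field is a compile error rather than a production incident, and a systemd unit is rendered from typed data. The Vector binary path and config flag used here are assumptions; verify them against your installation.

```ocaml
(* Hypothetical typed systemd unit; every field is checked by the compiler. *)
type systemd_unit = {
  unit_name : string;
  description : string;
  exec_start : string;
}

(* Hypothetical typed role: a typo in a field name fails to compile. *)
type role = { role_name : string; units : systemd_unit list }

(* Assumed Vector install path and config location. *)
let vector_unit =
  {
    unit_name = "vector.service";
    description = "Vector log collector";
    exec_start = "/usr/bin/vector --config /etc/vector/vector.toml";
  }

let log_collector_role = { role_name = "log-collector"; units = [ vector_unit ] }

(* Render the unit file text, ending with a trailing newline. *)
let render_unit (u : systemd_unit) : string =
  String.concat "\n"
    [
      "[Unit]";
      "Description=" ^ u.description;
      "";
      "[Service]";
      "ExecStart=" ^ u.exec_start;
      "Restart=always";
      "";
      "[Install]";
      "WantedBy=multi-user.target";
      "";
    ]

(* Directory where systemd picks up locally installed units. *)
let unit_path (u : systemd_unit) : string = "/etc/systemd/system/" ^ u.unit_name
```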

Infra management tools ought to be child's play. There is nothing inherently complex about them: generate some files and write them to the filesystem, maybe restart a systemd service or spin up a Docker container with mounted directories. These things will never be as complex as, say, doing 3D vector math for a game, or developing a relational database or a programming language. Yet the people who write infra management tools, since their task is so simple and they want to pretend it isn't, needlessly complicate things for themselves by making what they write as generic as possible for every use case under the sun, dragging huge complexity into otherwise very simple software.
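The "write files, maybe restart a service" core really is small. A minimal sketch, assuming a hypothetical `install_file` helper: it writes the file only if its contents changed (via a temporary file and a rename) and reports whether anything changed, so the caller knows when a restart is needed.

```ocaml
(* Read a small file fully, or None if it does not exist yet. *)
let read_file_opt (path : string) : string option =
  if Sys.file_exists path then begin
    let ic = open_in_bin path in
    Some
      (Fun.protect
         ~finally:(fun () -> close_in ic)
         (fun () -> really_input_string ic (in_channel_length ic)))
  end
  else None

(* Hypothetical helper: write [contents] to [path] only when it differs.
   Writes a temporary file first and renames it over the target, so readers
   never see a half-written file. Returns true when the file changed. *)
let install_file ~(path : string) ~(contents : string) : bool =
  if read_file_opt path = Some contents then false
  else begin
    let tmp = path ^ ".tmp" in
    let oc = open_out_bin tmp in
    Fun.protect ~finally:(fun () -> close_out oc) (fun () -> output_string oc contents);
    Sys.rename tmp path;
    true
  end
```

A caller could then run something like `systemctl restart vector.service` only when `install_file` returns `true`, which keeps repeated runs idempotent.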

You see, you don't get the best and most ingenious people working on tools that write some files to the filesystem, spin up a few systemd services and run a few Docker containers. Just as we discussed in an earlier post that nothing is equal, the brilliance of developers working on different problems in the industry is not equal either. The most brilliant devs are likely working on games, databases, programming languages, theorem provers and so on. This is where the smartest minds are. In web development you have much lower-quality people, since brilliance is not a concern when generating web pages from database queries. Same with frontend, and same with infra management tools.

Just the fact, that there are really only three choices infra management today, namely Chef, Puppet and Ansible, and two of them are developed in Ruby, Ansible in python - that speaks volumes of how lowly esteemed and underdeveloped and uncompetitive that niche is. Infra management is the Africa of software development. Writing code, that could potentially bring down hundreds of machines in production to a standstill, with a language, which you MUST RUN in order to see if your code works - that's a recipe for disaster. And proponents of such tools are sufferers of a deep mental disorder. Usage of raw jsons to describe roles, where you can make any sort of mistake, instead of typesafe struct in programming language like OCaml, where illegal cases are mostly irrepresentable, is absurd.

Conclusions

The more assumptions you make for the specific solution you want to implement, the simpler your solution will be. You don't always have to fear the statement "such and such pile of crap has been developed for 10 years - we could not implement it ourselves". Sure, I wouldn't implement something like Postgres on my own; it is a very powerful database that can cover anything you need. But with weekend projects such as Chef, which don't really do anything besides writing files to the filesystem, it is much easier to implement a trivial solution yourself (with, say, the rock-solid type safety of OCaml and the pattern) and only implement the features you actually need. Overall you will have very simple, specific code that is easy to maintain and doesn't carry all of Chef's complexity.


The more assumptions you make, the simpler the code can be. The fewer assumptions you have, the more complex the code is.

Consider two solutions to a function that reads a file into a string of bytes. To me, such a function is a phenomenon. Back in the day, many programming languages, like Java, did not have a single function that could read an entire file into a byte string. You had to use the Apache Commons jar.

Why is that? Such functions make development much easier. Many answers on Stack Overflow suggest creating a buffered reader and then spinning a loop (https://stackoverflow.com/questions/731365/reading-and-displaying-data-from-a-txt-file). Why all this complexity?

The knee-jerk reaction to this is the usual "well, what if the file is 100 exabytes? You couldn't fit such a file in memory." That is true, we could not read an exabyte file into memory. But how often do we do that? If we need to manage that much data in a single file, SQLite is a better option anyway. The files we usually need to read, say, a source file, a secret or a JSON file, are tiny. Why complicate the 99% of cases, where the file is indeed small and the function to read it can be super simple, with a needless streaming abstraction just in case the file is exabytes?

Reading a stream is a generic (there is that evil word again), low-level, complex solution. If the solution is specific to small files, it is very simple: a single function that reads the whole file into memory and is done. Another thing: Java cannot assume the file is plain ASCII, so you have to decode that stream of bytes as UTF-8 into Java's String, whose characters are, ironically, two bytes each. What if we make one more assumption and settle the encoding too? UTF-8 is a universally supported format, so our read-file-to-string function becomes simpler again.
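Under those two assumptions, the whole thing fits in a few lines. What follows is a minimal OCaml sketch, not code from the original post; `read_file` is a hypothetical name, while the functions it calls (`open_in_bin`, `in_channel_length`, `really_input_string`, `Fun.protect`) come from OCaml's standard library (4.08 or newer).

```ocaml
(* Read a whole file into a string, under the two assumptions above:
   1. the file is small enough to hold in memory, so no streaming loop;
   2. the contents are UTF-8 (or raw bytes). OCaml strings are byte
      sequences, so no decoding into a wider character type is needed. *)
let read_file (path : string) : string =
  let ic = open_in_bin path in
  (* Fun.protect closes the channel even if the read raises. *)
  Fun.protect
    ~finally:(fun () -> close_in ic)
    (fun () -> really_input_string ic (in_channel_length ic))
```

For example, `read_file "/etc/hostname"` returns the file's contents as one string. On OCaml 4.14 or newer, the standard library's `In_channel.with_open_bin path In_channel.input_all` does the same thing in one line.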

You see how we made two assumptions and our problem and solution became simpler?

Infra management

The current software development world is full of such complexities due to a lack of assumptions (a lack of information). For instance, the infra management tool Chef, which I utterly disrespect (I think every company is better off writing its own in OCaml), has folders for files and templates. Every Chef cookbook has a default folder, and may also have windows/linux/solaris and whatnot folders for templates specific to that platform. What if a company only uses a specific version of Linux? All of that becomes useless complexity. Since Chef is a generic, used up, smurfed out, whorish solution that kinda does a crappy job at everything, a jack of all trades and master of none, it also brings the complexity of every hipster company into one project for everyone to bear. Same with Kubernetes.

I have heard arguments like "Well, would you rewrite Chef yourself? Do you know how many man-hours were put into that thing?" Yes, I would. I would implement only the parts I need, listed below.

These are the assumptions I would make about the infrastructure:

1. Use a certain version of Debian

2. All nodes must have Docker installed

3. Any component that is needed (Postgres, Kafka, ClickHouse, Prometheus, you name it) is installed via Docker
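With assumption 3 in place, a single record type can describe every component and a single function can turn it into a `docker run` command. The sketch below is a hypothetical illustration under these assumptions, not the author's tool: the `component` type, `docker_run_args`, and the Prometheus image, ports and paths are examples chosen for illustration. Note that there is no OS field at all, because Debian is assumed everywhere.

```ocaml
(* One record describes any component, because every component runs in Docker.
   There is no OS/platform field: Debian is assumed on every node. *)
type component = {
  name : string;                     (* container name *)
  image : string;                    (* docker image *)
  ports : (int * int) list;          (* (host port, container port) *)
  volumes : (string * string) list;  (* (host dir, container dir) *)
}

(* Turn a component into the argv of a `docker run` invocation.
   Returning a list instead of a shell string avoids quoting bugs. *)
let docker_run_args (c : component) : string list =
  let ports =
    List.concat (List.map (fun (h, ct) -> [ "-p"; Printf.sprintf "%d:%d" h ct ]) c.ports)
  in
  let volumes =
    List.concat (List.map (fun (h, ct) -> [ "-v"; h ^ ":" ^ ct ]) c.volumes)
  in
  [ "docker"; "run"; "-d"; "--name"; c.name; "--restart"; "unless-stopped" ]
  @ ports @ volumes @ [ c.image ]

(* Hypothetical example: Prometheus with its data directory on the host. *)
let prometheus = {
  name = "prometheus";
  image = "prom/prometheus";
  ports = [ (9090, 9090) ];
  volumes = [ ("/srv/prometheus", "/prometheus") ];
}
```

`String.concat " " (docker_run_args prometheus)` yields `docker run -d --name prometheus --restart unless-stopped -p 9090:9090 -v /srv/prometheus:/prometheus prom/prometheus`. Keeping it an argument list means it can be handed to a process-spawning function without going through a shell.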

What I don't need to implement from Chef this way in my OCaml infra management tool (which, by the way, I did implement for myself):

1. Support for any OS other than Debian Linux

2. Adding certain Debian package repositories for, say, the newest Postgres; just run it in Docker

3. All the kitchen insanity; I'd turn component configs into data and analyze that config data instead, using the pattern
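As one possible reading of analyzing config data, here is a hypothetical OCaml check (not the author's pattern, whose details are not shown in this post) that runs over plain config data before anything is deployed: it reports every host port that two components on the same node both try to publish. The `deployment` type and `port_conflicts` are illustrative names.

```ocaml
(* Hypothetical config data: which component runs on which node and which
   host ports it publishes. *)
type deployment = { node : string; component : string; host_ports : int list }

(* Check the config data before anything touches a machine: return every
   (node, port) claimed by more than one component, with the claimants. *)
let port_conflicts (ds : deployment list) : (string * int * string list) list =
  let tbl = Hashtbl.create 16 in
  List.iter
    (fun d ->
      List.iter
        (fun p ->
          let prev = match Hashtbl.find_opt tbl (d.node, p) with Some l -> l | None -> [] in
          Hashtbl.replace tbl (d.node, p) (d.component :: prev))
        d.host_ports)
    ds;
  (* Keep only contested ports; restore declaration order of claimants. *)
  Hashtbl.fold
    (fun (node, port) comps acc ->
      if List.length comps > 1 then (node, port, List.rev comps) :: acc else acc)
    tbl []
  |> List.sort compare
```

For example, putting `postgres` and a hypothetical `pgbouncer` on node `db1`, both publishing port 5432, yields `[("db1", 5432, ["postgres"; "pgbouncer"])]`, while the same port on different nodes is not a conflict.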

What I'd improve upon:

1. Maybe implement iptables rules if I wanted to restrict who can talk to which component

2. Typesafe roles, unlike the raw JSON that Chef uses, in which it is very easy to make a typo that ends up in production

3. Implement a typesafe, no-nulls-allowed function that installs a generated infra file to the filesystem

4. Implement systemd functions to, say, install a systemd unit for things like Vector to collect logs
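Points 2 to 4 above can be sketched together in OCaml. This is a hypothetical illustration under the stated assumptions, not the author's implementation: the `component` variant, the `role` and `infra_file` records, the unit file paths and the Vector and Prometheus command lines are all examples. Roles are ordinary typed values instead of JSON, generated files are records with no optional fields, and a systemd unit is just rendered text.

```ocaml
(* Sketch only: component names, unit paths and commands are hypothetical. *)

(* Every component the tool knows about. A misspelled name in a role is a
   compile error, unlike a typo in a raw JSON role file. *)
type component = Prometheus | Vector

type role = { role_name : string; components : component list }

(* A generated file to install. Every field is mandatory: OCaml has no null,
   so a file cannot be missing its path, contents or permissions. *)
type infra_file = { path : string; content : string; perm : int }

(* Render a minimal systemd unit. *)
let systemd_unit ~description ~after ~exec_start =
  let after_line = if after = [] then [] else [ "After=" ^ String.concat " " after ] in
  String.concat "\n"
    ([ "[Unit]"; "Description=" ^ description ]
     @ after_line
     @ [ ""; "[Service]"; "ExecStart=" ^ exec_start; "Restart=always"; "";
         "[Install]"; "WantedBy=multi-user.target"; "" ])

(* The match is exhaustive: adding a component without a unit here
   produces a compiler warning. *)
let unit_file = function
  | Prometheus ->
    { path = "/etc/systemd/system/prometheus.service";
      content =
        systemd_unit ~description:"Prometheus in Docker" ~after:[ "docker.service" ]
          ~exec_start:"/usr/bin/docker run --rm --name prometheus -p 9090:9090 prom/prometheus";
      perm = 0o644 }
  | Vector ->
    { path = "/etc/systemd/system/vector.service";
      content =
        systemd_unit ~description:"Vector log collector" ~after:[]
          ~exec_start:"/usr/bin/vector --config /etc/vector/vector.toml";
      perm = 0o644 }

let files_for_role (r : role) : infra_file list = List.map unit_file r.components

(* Write a generated file. perm only applies when the file is created,
   and the process umask still applies. *)
let install_file (f : infra_file) : unit =
  let oc = open_out_gen [ Open_wronly; Open_creat; Open_trunc; Open_binary ] f.perm f.path in
  Fun.protect ~finally:(fun () -> close_out oc) (fun () -> output_string oc f.content)
```

A role such as `{ role_name = "monitoring"; components = [ Prometheus; Vector ] }` expands to one unit file per component, and misspelling `Prometheus` is rejected by the compiler instead of surfacing in production. After new unit files are installed, systemd still needs `systemctl daemon-reload` before it picks them up.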

#36

Main Thesis / Part 5 - The World of Inequali...

Last post by CultLeader - June 03, 2021, 10:40:49 AM

Earlier I mentioned that I cannot stand the attitude that "every programming language is equal, but some are better for some things and some for others". Same with databases, infra management tools, you name it. Today we'll debunk this leftist cuck nonsense.

Humans

Consider every human and their attributes: height, weight, how smelly they are, metabolism, etc. All are decided by genes. So, if we take just the one dimension of height, does it make sense to categorize people by height? By centimeters? There are tons of people who would fall into the same centimeter of height, yet by millimeters they are still of different heights. So height is a spectrum, and people are not equal in height. If we picked two people at random from this earth and I had to bet on "are they the same height?", I'd always bet against it, that they are of different heights, and I'd be right 99% of the time.

The same goes for other dimensions, like weight or how smelly they are: people differ greatly along each of them. So, even on single dimensions taken separately, people are very different.

Now let's take all these attributes of a single person and say that every person is described by an array of scores, one for each attribute, from 0.0 to 1.0.

| name  | height | weight | smelliness |
|-------+--------+--------+------------|
| alice |    0.6 |    0.4 |        0.8 |
| bob   |    0.8 |    0.6 |        0.5 |

What are the odds that we will find equal rows in such tables with infinite attributes? Practically zero for every person on this earth?

Okay, now consider that we need to evaluate whether people are fit or unfit for the same job based on certain attributes. Say we need to evaluate basketball players, and we pick a few dimensions we need for people:

| name  | height | weight | speed | accuracy | quick thinking |
|-------+--------+--------+-------+----------+----------------|
| bob   |    1.0 |    0.5 |   0.3 |      0.1 |            0.2 |
| roy   |    0.8 |    0.9 |   0.1 |      0.7 |            0.4 |
| dylan |    0.8 |    0.4 |   0.9 |      0.9 |            0.8 |

And the sum of these attributes in float space would be the suitability of this person to perform well in the basketball arena. What are the odds of these sums being equal between candidates? Next to none?

So, we clearly see that humans are exceedingly unlikely to be equal amongst themselves, whether in single attributes, in all their attributes, or in the attributes needed for a specific job.

Programming languages

Okay, let's apply the same logic to programming languages, with each attribute scored from 0.0 to 1.0, 1.0 being the best and 0.0 being the worst (all the values are my opinions).

What are the odds of these ever being equal among different programming languages? Some language will have to come out on top with the biggest score.

As you can see, overall Rust somewhat overtook OCaml. But that assumes the weights are equal, that is, that I value all the properties equally in this sum. Let's look at what I believe the weight of each attribute is, from 0.0 to 1.0, since the weights themselves aren't equal either. The same logic applies to weights of importance: these cannot be equal either!

As you can see, type safety is paramount, as I'm using the pattern in my projects. Conciseness should be decent, but a perfect score is not necessary; it shouldn't be as bad as Java, is all I'm asking. Syntax sugar is completely irrelevant, since I'm using the pattern and can get as much sugar as I want. Performance should be decent, but is not of paramount importance. Memory usage should also be decent, as I want to get the most out of cheap boxes; I don't want big beefy machines just so I can run memory-hungry JVMs. Metaprogramming capabilities are also irrelevant, as I'm using the pattern.

So, how about we multiply the languages' scores by the weights and see the final scores we get?

And, as you can see, once the attributes I care about most are weighted, OCaml comes out as a clear winner, with Rust as the second choice. Ruby and Lisp become utterly irrelevant, since they have no type safety and hence cannot be used to implement the pattern. No two languages are equal either.

The same can be done with databases, infrastructure management solutions, IDEs, you name it. None of the outcomes of such an analysis will be equal. Your job is to find the best one. And I don't mean "the best one for such and such use case", I mean "the best one".

Next time some leftist cuck says to you "I develop NodeJS and my code is as solid as what you do in OCaml because I spend double the development time writing muh precious unit tests", refer such an idiot to this forum post.
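The scoring described in this post, with per-attribute scores from 0.0 to 1.0 that are either summed directly or multiplied by per-attribute weights and then summed, can be sketched in OCaml. The player records copy the basketball table; the weights are hypothetical values for illustration only, not the author's weights, which are not shown here.

```ocaml
(* Attribute scores from 0.0 (worst) to 1.0 (best), as in the basketball table. *)
type player = {
  name : string;
  height : float;
  weight : float;
  speed : float;
  accuracy : float;
  quick_thinking : float;
}

let players = [
  { name = "bob"; height = 1.0; weight = 0.5; speed = 0.3; accuracy = 0.1; quick_thinking = 0.2 };
  { name = "roy"; height = 0.8; weight = 0.9; speed = 0.1; accuracy = 0.7; quick_thinking = 0.4 };
  { name = "dylan"; height = 0.8; weight = 0.4; speed = 0.9; accuracy = 0.9; quick_thinking = 0.8 };
]

(* Attributes in a fixed order, so they line up with a weight list. *)
let scores p = [ p.height; p.weight; p.speed; p.accuracy; p.quick_thinking ]

(* Weighted sum: multiply each score by its weight and add everything up.
   List.fold_left2 raises Invalid_argument if the lists differ in length. *)
let weighted_sum (weights : float list) (p : player) : float =
  List.fold_left2 (fun acc w s -> acc +. (w *. s)) 0.0 weights (scores p)

(* The plain sum is the special case where every weight is 1.0. *)
let plain_sum p = weighted_sum [ 1.0; 1.0; 1.0; 1.0; 1.0 ] p

(* Hypothetical weights, for illustration only. *)
let example_weights = [ 1.0; 0.3; 0.6; 0.9; 0.8 ]

(* Highest-scoring candidate under a given scoring function. *)
let best (score : player -> float) (ps : player list) : player option =
  match ps with
  | [] -> None
  | p :: rest -> Some (List.fold_left (fun b q -> if score q > score b then q else b) p rest)
```

With these numbers the plain sums are 2.1 for bob, 2.9 for roy and 3.8 for dylan; under the hypothetical weights they become 1.58, 2.08 and 2.91 (up to floating-point rounding), so no two candidates tie either way.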